
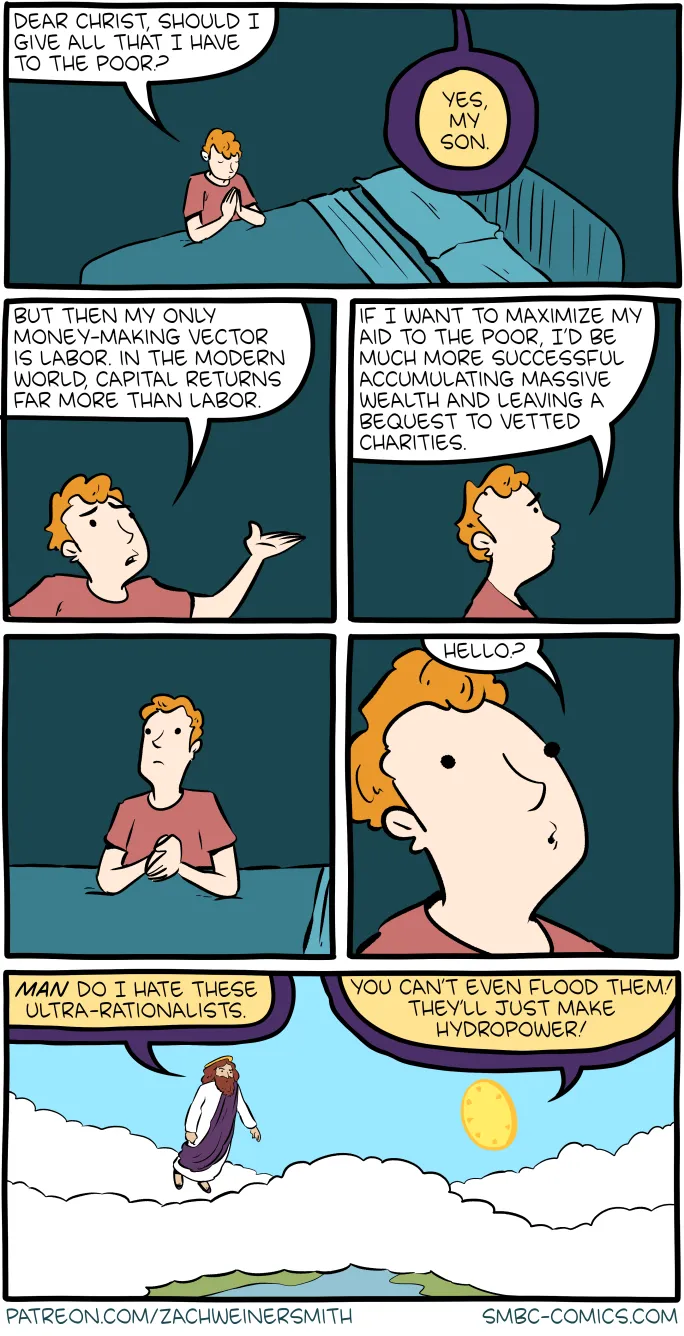

Hovertext:

How come nobody ever prays for sound investment advice?

Today's News:

Amazon has Oregon E72 PowerCut Replacement Chainsaw Chain for 20" Guide Bars on sale for $11.98. Shipping is free w/ Prime or on $35+ orders.

Thanks Deal Hunter TattyBear for sharing this deal

Features:

- Replacement chain for 20" (51 cm) guide bars
- Drive links: 72; pitch: 3/8"; gauge: .050"
- Fits chainsaws of 50-100 cc

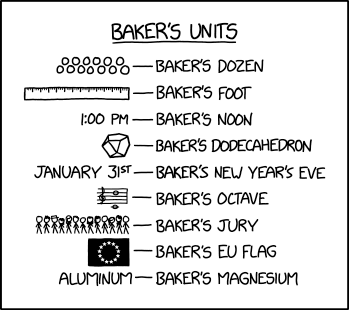

Hovertext:

The only downside is you have to go in every six months to get spun up.
